BOBBY YEAH


The adventures of a petty thug. Official selection, Sundance Film Festival 2012. BAFTA Nomination: Best Animated Short 2012. More stuff here: www.facebook.com/robertmorganfilms

See the latest progress made at Apple Campus 2, featuring stunning shots of the “spaceship”, the auditorium, the R&D center, and more. Landscaping and other smaller structures are beginning to pop up throughout the campus.

Recorded using a DJI Phantom 3 Professional.

http://amzn.to/1Ye3GKO

Edited by Matthew Roberts

Watch All Apple Campus Updates:

July 2016: https://youtu.be/V8W33JxjIAw

June 2016: https://youtu.be/8onw-9psueE

May 2016: https://youtu.be/VUs_YrLvA0k

April 2016: https://youtu.be/jn09eBljAzs

March 2016: https://youtu.be/2bwoJJIyB_4

To use this video in a commercial player or in broadcasts, please email MRVideography@gmail.com

All Local Regulations Were Followed at the Time of Filming.

Dear world,

Let’s talk about time.

When I uploaded my first YouTube video 7 years ago, I never would have thought it would get so much attention. I had lots of discussions, met new people, and kept making videos about things that intrigued me, or just tried out effects.

I enjoyed it a lot, and I still read every comment that pops up. And they keep coming in. It is a hobby I am very glad I started, and I am humbled by the attention.

Well, as time went by I found less time to work on videos, struggled with other things in life, and wondered if I would ever find the time again.

But I always knew one thing:

I owe you something. People have been asking for a sequel to Star Size Comparison all the time. And yes, I promised once.

I keep my promises. So, whenever I found time over the last year I spent it on that. Here it is.

I hope you like it. I tried to do it in a slightly different way, and I am curious to hear your feedback. I know I will hardly be able to beat the choice of music from the first part, but let me say that Vangelis’s “Alpha” is a piece that is very dear to my heart; I always had it in mind.

I am still looking to get in touch with musicians.

I do not know what the future brings, but I hope we will hear from each other. Enjoy.

This clip is an unofficial film for Fakear’s track “Song for Jo”, offered to him. Ask yourself: is a 5:30 a.m. wake-up worth a sunrise over the horizon? Fakear’s “Song for Jo” inspired this piece of film about warmth, peacefulness and rumination.

Download / listen to Fakear’s first album “Animal”: http://fakear.lnk.to/AnimalAlbum

Directed and edited by Clément Lefer: www.clementlefer.com

Produced by Metrolab Productions: https://www.facebook.com/Metrolab-Productions-1525294607751916/?fref=ts

Special thanks to Raphaël Botton, Rudy Acoca and Leucate Plage.

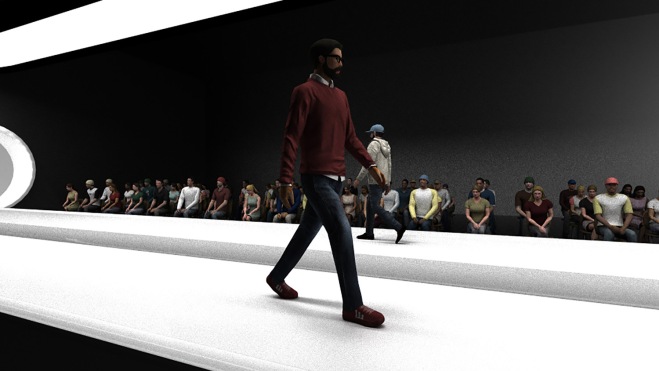

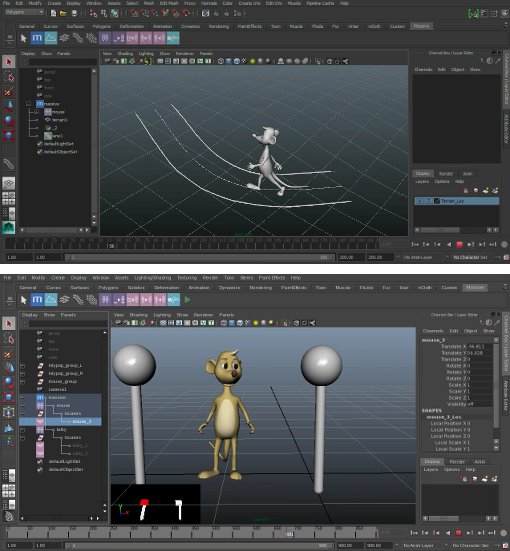

When Massive (Multiple Agent Simulation System in Virtual Environment) was first revealed to the world in 2001 as part of the release of Peter Jackson’s Lord of the Rings films, it stunned audiences – and the visual effects community – with its use of fuzzy logic to aid in crowd animations. Developed at Weta Digital by Stephen Regelous, Massive then became a product on its own. Regelous has continued to build and grow Massive and recently at SIGGRAPH he showed off a new Parts Library and the new 3ds Max integration. vfxblog sat down for a demo of the latest software and a chat with Regelous about the state of AI and where Massive is ‘at’, particularly in an environment with new crowd simulation competitors and perhaps new opportunities away from film and TV.

vfxblog: What does the code base or the software of Massive look like compared to when you wrote it for Lord of the Rings?

Stephen Regelous: It’s exactly the same. I mean, it was a couple hundred thousand lines of code when I’d finished at Weta, and it’s now about half a million lines of code but that couple hundred thousand lines of code is still in there.

vfxblog: So the idea of the brain, the crowd agents and everything like that is still the central tenet?

Stephen Regelous: It’s exactly the same. In fact, we often get questions like, ‘So, when you finished with Lord of the Rings, how did you deal with this whole new thing?’ It wasn’t a new thing, because I’d had that in mind right from the start. Even when we were at Weta and I’d got the simulations working and it was time to start looking at how we were going to do shots with it, the management was saying, ‘Okay, well, now we’ve got to figure out what the UI is for doing shots.’ I said, ‘Here it is.’ Because I’d done that right from the start. I was planning for it to be used in production, I was planning for it to then go on to other studios, so that was always there.

vfxblog: What were people doing, do you remember, before crowd simulation software became popular?

Stephen Regelous: Well, hand animation was always an option, and believe it or not it’s still used to this day. But the more common option for crowds was to do a particle animation and then either stick cards on them, which was not really the favored option, or to stick pre-baked cycles onto it.

vfxblog: There are now several competitors to Massive in the crowd simulation space. What do you think are Massive’s strengths compared to some of the other tools?

Stephen Regelous: Well, the biggest difference, certainly between Massive and Golaem, for example, is that Massive is an autonomous agent animation system. It’s not a particle system with cycles attached. When you take the particle approach, what you have to do is modify the motion to fit what the particles did, which is backwards. What you want is for the agents to be driven by realistic motion, so where they put their feet is determined by the actions they perform. It’s got all the naturalness of the original performance, and that determines where they end up going, because they say, ‘I want to do this action.’

And they pick this action from their library, they perform the action, and it progresses them through the scene. That way you get very natural motion. If you try to conform the motion to what a particle did then you’re going to make compromises to the motion, and compromising the quality of the motion is not a good thing to do. No animation supervisor is gonna like you if you do that.

Another issue with Massive versus other things is the black box thing. Most crowd systems are built on what I call black box functionality. If you want terrain adaptation or look-at, there’s a box for it, there’s a function for it, and you’ve got parameters and you can control it. But there’s always a time when it doesn’t do what you need, and then how do you get inside? And what do you see inside, if you can get inside at all?

So there have been crowd systems in the past that didn’t let you inside at all, so if that functionality breaks in the shot, you’re screwed. You’re just screwed. You gotta go back to hand placement or something. I think some systems allow you to write code that goes inside those nodes, or to make your own nodes with code inside, but if you’re writing code during shot production you’re also screwed, because the turnaround cycle for writing code is way, way longer than what you’ve got for doing your shots. Especially when you compare it to Massive, where if you’ve already built your agents you should be able to do a shot easily in a day, maybe in less, maybe get a couple of iterations of a shot in a day. And in fact I was just saying before that some studios budget Massive shots as 2D shots because they’re actually so much cheaper than a typical 3D shot.

vfxblog: Over the twenty years of development, what would you say are some of the major changes you have made to Massive?

Stephen Regelous: One thing that pops into my mind is agent fields. Initially I was taking, and in a way I still am, a pure artificial life approach to solving this problem. There have been a lot of engineering approaches to doing crowds, and you end up with engineering: you get robotic behavior, or you get something that isn’t very artist friendly. But the end result has to look natural, so if you use natural processes you’re more likely to get a natural result.

And if the processes that you’re using are based on what happens in nature then if something goes wrong it’s probably going to go wrong in a fairly natural way instead of a glitchy way. And in fact that is the case. We’ve had lots of happy accidents. You get happy accidents with Massive all the time. And going back to that famous case of the first battle that we did where we had agents running away – and everyone ascribes that to them being too smart to fight. But actually it was because there was something that I hadn’t thought of to put in their brain, but the end result was it looked natural.

So the artificial life approach is what I started with, and so we did sensory input. They have vision, they have sound, they have an actual image of the scene generated from the point of view of each agent, and those very pixels go into their brain for processing through the fuzzy logic rules. It was very hardcore artificial life stuff so that you get natural behavior for sure.

One of the more recent big changes I made was Parts, where you can have parts of an agent, such as its brain, saved in separate files. Say you’ve built a really great adaptation module in the brain, and you want all your agents to automatically get that, along with any changes you make to that one file. You can now do that using Parts. That’s especially useful for big studios that have lots of people working on their agents, where someone’s gotta work on this part of the agent and someone else on that one, and they want all those different bits to be called up when they load the agents. That’s great, but the idea behind Parts is that you can have a library of parts that you just drop in to build brains in seconds, instead of days or perhaps weeks of brain building.

But then I had to make the Parts Library, and so I thought it was just gonna take a couple of weeks to throw together a bunch of useful parts, and then there we go I’ll get that out, but it actually turned out to be months of work to make a good parts library. And it’s in the process of doing that I discovered that this was possibly the biggest, most important change that I’ve made.

vfxblog: When all the press came out about Massive at the time of Lord of the Rings, AI was the selling point, you know, the discussed point. Has there been any other kind of AI things that you’ve noticed or developments that you would want to put into Massive?

Stephen Regelous: No. Well, a lot of the fuss about AI at the moment is deep learning, or more properly convolutional networks. And that’s very useful. It’s basically artificial neural networks, and right when I started with Massive that was obviously an option. I could have made the brains out of neural networks, but they’re not production friendly because you need a lot of training, and if you make a change that’s a lot more training. So the director can’t come in and say, ‘Oh, make them pick their feet up a bit higher,’ or ‘make them cut corners a bit more.’ It’s like, well, then we’ve got to re-train all the networks, and that’s a lot of work.

So fuzzy logic can be functionally equivalent to neural nets, but you don’t train it. You just write it, you just put together the rules yourself. And in the case of Massive it’s just dragging and dropping nodes. And so because it had to be used in production it had to be something that you can change instantly, and fuzzy logic can give you the same naturalness as neural nets but you don’t train them, you just author them as you do other assets in production.
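To make the “author, don’t train” point concrete, here is a toy fuzzy rule set in Python. It is a minimal sketch under stated assumptions and not Massive’s node system: the inputs (obstacle distance and bearing), the ramp-shaped membership functions, every threshold, and the weighted-average (Sugeno-style) defuzzification are all illustrative choices. Because the rules are written by hand, changing how an agent behaves means editing a number or a rule, not retraining.

```python
def ramp_down(x: float, lo: float, hi: float) -> float:
    """Membership that is 1.0 at or below lo, 0.0 at or above hi, linear in between."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def ramp_up(x: float, lo: float, hi: float) -> float:
    """Complement of ramp_down: 0.0 at or below lo, 1.0 at or above hi."""
    return 1.0 - ramp_down(x, lo, hi)


def steer(obstacle_distance: float, obstacle_bearing: float) -> float:
    """Return a turn in degrees (positive = right) from hand-written fuzzy rules.

    obstacle_bearing is in degrees; negative means the obstacle is to the left.
    All thresholds are illustrative assumptions, not values from Massive.
    """
    # Fuzzify: how true is each linguistic statement, on a 0..1 scale?
    near = ramp_down(obstacle_distance, 2.0, 10.0)
    far = ramp_up(obstacle_distance, 2.0, 10.0)
    on_left = ramp_down(obstacle_bearing, -30.0, 30.0)
    on_right = ramp_up(obstacle_bearing, -30.0, 30.0)

    # Rules as (firing strength, suggested turn); min() acts as fuzzy AND.
    rules = [
        (min(near, on_left), 45.0),    # obstacle near and on the left -> turn right
        (min(near, on_right), -45.0),  # obstacle near and on the right -> turn left
        (far, 0.0),                    # obstacle far away -> keep going straight
    ]

    # Defuzzify with a weighted average of the rule outputs.
    total = sum(strength for strength, _ in rules)
    if total == 0.0:
        return 0.0  # defensive guard; with these rules total never reaches zero
    return sum(strength * turn for strength, turn in rules) / total
```

For example, `steer(1.0, -40.0)` evaluates to `45.0` (obstacle close on the left, so turn fully right), and `steer(20.0, 0.0)` evaluates to `0.0`. Partial truths blend smoothly: at a distance of 6.0 the “near” and “far” rules each fire at half strength, giving a gentler turn.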

vfxblog: Can you talk about other developments with Massive, such as, can customers do their own thing with the software via APIs etc?

Stephen Regelous: They can to some degree. We’ve got a couple of APIs. We’ve got a C/C++ API, which has been used for some pretty extensive stuff here and there, and there’s a Python API for more pipeline-level stuff. But in general the hackability is more in the ASCII files that Massive reads and writes, because then people can do whatever they want, really. When I wrote Massive, that was where most pipeline hacking was going on: in the files that programs were sharing. So I made the files very easy to hack. And of course Python’s good at hacking text, so you can write your own Python scripts to modify those files if you want to.

So there are several ways to get in there and do stuff. There is an assumption these days that, because Python in Maya is the way a lot of pipelines work, you need to be doing that stuff in Massive too, but the flexibility of what you can do in the brains actually gets rid of about ninety percent of that requirement. So the APIs we’ve got, or hacking the text files, are actually plenty for what most people need.

vfxblog: Tell me more about the new Parts library in Massive, why did you build this?

Stephen Regelous: This was the biggest wakeup call since I made Massive, you know, the fact that now you can just build an agent’s brain in seconds instead of laboriously putting nodes together for days or whatever. So the parts library comes with some blank agents. They’ve got no brain, but they’ve got a very simple body. In this case this is a bird agent and we want it to flock, so we drop in the Flock part, which also brings in the Turn 3D part because it needs that and it knows that. Then we drop in the Follow 3D Lane part because we want this to follow 3D lanes, and it knows which other parts it needs, so they’re loaded automatically. And we’ll also drop in a Flap part, which will flap their wings when they’re going uphill, basically. So there you go: that’s less than a minute to build the brain for this agent, and now they’re a lovely flock of birds flapping their wings and going the direction you want.
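Regelous describes parts that know which other parts they need: dropping in Flock also brings in Turn 3D. One minimal way to sketch that behavior is a depth-first dependency resolution that loads every part after its prerequisites and loads shared prerequisites only once. The dependency table below is hypothetical: only “Flock needs Turn 3D” comes from the interview, and nothing here reflects how Massive actually stores or loads parts.

```python
# Hypothetical part catalogue: each part lists the parts it needs.
# Only "Flock needs Turn 3D" comes from the interview; the rest is assumed.
PART_DEPENDENCIES: dict[str, list[str]] = {
    "Flock": ["Turn 3D"],
    "Follow 3D Lane": ["Turn 3D"],
    "Turn 3D": [],
    "Flap": [],
}


def load_parts(requested: list[str],
               deps: dict[str, list[str]] = PART_DEPENDENCIES) -> list[str]:
    """Return parts in load order, each one after everything it depends on."""
    order: list[str] = []
    loaded: set[str] = set()
    in_progress: set[str] = set()

    def visit(part: str) -> None:
        if part in loaded:
            return  # already pulled in by an earlier part
        if part in in_progress:
            raise ValueError(f"circular dependency involving {part!r}")
        in_progress.add(part)
        for dep in deps.get(part, []):
            visit(dep)  # load prerequisites first
        in_progress.discard(part)
        loaded.add(part)
        order.append(part)

    for part in requested:
        visit(part)
    return order
```

Dropping in the three parts from the bird example, `load_parts(["Flock", "Follow 3D Lane", "Flap"])` returns `["Turn 3D", "Flock", "Follow 3D Lane", "Flap"]`: Turn 3D is loaded once, before either part that needs it. Python’s standard `graphlib.TopologicalSorter` offers the same ordering if you prefer not to hand-roll it.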

Formation is something people often want to do, and it can be a bit tricky to set up, but we’ve got a formation part, so bang, you’re done. The placement, of course, is easy, but to keep them in formation as they’re walking around, they need to keep their relationship to the guy at the side and the guy in front, otherwise the formation just falls apart. We’ve also got biped terrain because there’s undulating terrain here, and action variation will get them out of sync with each other so they’re not all perfectly in step. And head turns, so they look around now and then: even if there are no sounds in the scene, they’ll still look around a little bit.

So all of that takes a few seconds to put together. In fact, I think I timed myself making this one, and it was twenty-nine seconds. Even for me it would have taken at least hours, if not a day or two, to build that brain, and for somebody with much less experience it could be a week of work to create all of this stuff, because inside these nodes there’s quite a lot going on. But now it’s just seconds, literally twenty-nine seconds to make that brain.

vfxblog: Have you considered producing Massive for any applications other than TV or film, such as a phone app or a specific game or anything like that?

Stephen Regelous: Well, I do have a demo where you can build a playable game using the Parts library in about a minute, a playable game with actual joystick input. I’ve thought about trying to make a product out of that, where you can build your own games using Massive, and I’ve had various goes at making such things and done a bit of prototyping, and I’ve got a new one that I’ve just started working on. But none of these have come to fruition so far, because I always get to a point where I think, no, that’s not going where I want it to go. But we are talking to people about other applications all the time, and there are some things on the boil, but it’s too soon to talk about anything really.

vfxblog: Well, thanks so much for talking to me.

Stephen Regelous: Thank you.

Doesn’t it often happen that we remember the bad guys’ tricks more than the good guys’ virtues? It’s always said ‘Good over Evil’, but it becomes quite interesting when we consider just the evil side. Negative characters and their antics always have a greater impact on us than the positive characters. The positive characters have all the good traits, but the negative ones have a unique aura that imprints itself on our minds. This Friendship Day, instead of filling your kitty with good characters supposedly loved by all, we bring you some classy villains who have been with us since childhood.

Mojo Jojo

If you’re going to be a smart villain, you have to be someone like Mojo Jojo, a stout green-black monkey who needs no introduction when it comes to smarts. Featured in The Powerpuff Girls animated series on Cartoon Network in the late 90s, Mojo Jojo became a friend of the guys who watched the series and preferred his character traits over those of the three superpowered heroines. Frankly, it takes a lot of effort to come up with schemes to destroy the world, and Mojo Jojo’s acts were to some extent commendable.

Cruella de Vil

You can forget the names of the dogs, but you can’t forget her. You can’t forget her acts, attributes, style, clothes and deadly schemes to kill the black-spotted white dogs. Featured in Disney’s 101 Dalmatians, Cruella de Vil, as her name suggests, was the devilish friend to look up to. All her negativity would push you to be good and not be like her. Indeed, she was a friend kids waited for when the dogs were all happy and carefree in the animated series.

Shere Khan

If any character could make you cower in your bed, it was Shere Khan. With his powerful roar, scary face, sudden pounce from the woods, fierce paws and commanding dominance over the human kid Mowgli, Shere Khan fascinated viewers with his evil nature. In The Jungle Book of 1989, Shere Khan’s appearance in every episode spelled trouble for the playful Mowgli, yet viewers eagerly awaited it. Surprisingly, Shere Khan was the most dangerous friend of kids.

Joker

“All it takes is one bad day.” We have read it, we have heard it and we have seen it. The clown prince of crime, the sadistic psychopath: the Joker, created by DC Comics 75 years ago, has gone on to become one of the most loved villains in the comics universe, and was recently portrayed by Jared Leto in Suicide Squad and voiced by Mark Hamill in Batman: The Killing Joke. Why do we love him? He’s unpredictable and unrelenting, with little or no concern for himself, because everything is a joke to him. Over the years, the character has won our hearts with his uncanny attitude, and somewhere deep down, we are all insane, aren’t we?

Magneto

Once a friend of Charles Xavier, Erik Lehnsherr (Magneto) has been portrayed as a supervillain, an antihero and a superhero in Marvel’s X-Men universe. Though he and Xavier had different philosophies, both shared one goal: to protect mutants. That’s what makes him a true friend. His loyalty lay with Xavier, and no matter the circumstances, he will protect him and work with him if the situation demands it. Also, it’s hard not to agree with Magneto sometimes, as humans have committed some horrible acts against mutants in the X-Men universe.

Loki

Why Loki? As the “God of Mischief”, he doesn’t really seem all that threatening. From his attire (a helmet with giant horns) to his dialogue, Marvel’s Loki can be described as a funny archenemy of Thor who gets himself into comical situations. And it was ultimately because of him that the Avengers were formed. Loki, a master of lies and manipulation who generally finds ways to get whatever he wants despite his lack of brute strength, reminds us of those people in our lives who might be evil to us but whose acts, in the end, leave us astounded.

This Friendship Day, don’t just remember your friends but also those people who might have been your rivals. These are the ones who teach us lessons and who, with their dark, twisted personalities, spice up our lives. Who knows? Maybe those who were once your enemies have turned into your best friends.

The post Celebrate Friendship Day remembering the iconic villains whom you loved appeared first on AnimationXpress.

Toyota has used the opening of the Rio 2016 Olympic Games to launch “Stand Together”, an integrated advertising campaign connecting the brand with teamwork. The Toyota Stand Together commercial at the heart of the campaign features people across the world, from Toyota team members to skydivers to Team Toyota athletes Sanders and Purdy, joining hands and celebrating teamwork. Set to The Spencer Davis Group’s “Gimme Some Lovin’”, the spot communicates Toyota’s commitment to mobility that facilitates shared experiences. The commercial also includes a call to action: for every person who creates a video of people joining hands and shares it using the campaign hashtag #LetsJoinHands, Toyota will donate $20 to United Way, up to $250,000. In addition, Toyota will mix content from user and influencer submissions into pre-roll and social videos, continuously delivering fresh edits throughout the media run.

A prominent portion of the Toyota Stand Together campaign will be the #LetsJoinHands outdoor touchboard installations that combine live video feeds with real-time collaborative drawing tools to create a connective engagement between New York City’s Herald Square and Los Angeles’ Hollywood and Highland from August 12-14. Users have the opportunity to email or tweet video captures of their drawing sessions directly from the screens. An advanced cloud-based rendering engine automatically generates highlight reels featuring users’ digital drawings, suited to social sharing. The touchboards aim to capture the magic of the engagement while immortalizing these fleeting, yet genuine connections and collaborations.

“Teamwork is ingrained in Toyota’s DNA – it’s a founding principle,” said Jack Hollis, group vice president of marketing, Toyota Motor Sales, U.S.A. “We believe, as our founder did, in the ‘power of togetherness’ and athletics is an amazing example of the power of teamwork. While we do not compete at the highest levels of sport on the playing field, we celebrate the spirit of collaboration every day, whether it is within the walls of Toyota, or in our interaction with our guests.”

“Toyota is a brand that is founded on the power of movement and over the decades has enabled people to move forward and explore. The ‘Stand Together’ campaign is a reflection of the physical and emotional places we can go and the goals we can achieve together. By giving people a chance to engage with technology and people in unexpected ways, the campaign extends and redefines what it means to join hands and we hope to start a conversation around teamwork,” said Fabio Costa, executive creative director, Saatchi LA.

The Toyota Stand Together campaign was developed at Saatchi & Saatchi Los Angeles by chief creative officer Jason Schragger, executive creative director Fabio Costa, creative directors Leo Circo and Chris Pierantozzi, associate creative directors/art directors Kevin Schroeder and Jeremy Carson, associate creative directors/copywriters Randy Quan and Dan Sorgen, executive director, integrated production & operations Lalita Koehler, director of content Sara Seibert, executive content producer Pamela Parsons, senior content producer Jennifer Vogtmann, content producer Marina Korzon, executive digital producer Tarrah Barbour, associate producer Kat Olschnegger, director of business affairs Keli Christy, business affairs manager Erin D’Angelo, associate project manager Angela Montoya, group account directors Steve Sluk and Bryan DeSena, account director Patrick Young, account supervisor Joshua Phillips, account executive Brad Sanders, assistant account executives Kate Jensen and Natalie Johnson, social engagement director Allie Burrow, PR director Mike Cooperman, group planning director Evan Ferrari, senior planners Julienne Lin and Shaunt Halebian, executive communications director John Lisko, paid media director Chris Nicholls, earned media director Romina Bongiovanni, associate director of social media Karen Ram, social media supervisor Talia DiDomenica, media supervisors Helen Burdett and Sarah Buchalter, media planner Mitchell Gilbert, social media assistant Patrick Butcher, Toyota USA group vice president marketing Jack Hollis, marketing communications VP Cooper Ericksen, corporate manager Jim Mooney, corporate manager Scott Thompson, brand communications manager Russ Koble, national manager Mia Phillips, brand, multicultural and crossline marketing strategy Mia Phillips, brand & multicultural manager Landy Joe, social media marketing manager Florence Drakton.

Filming was produced at Anonymous Content by director Chris Sargent, director of photography Andre Chemetoff, senior executive producer Gina Zapata, executive producer Sue Ellen Clair, head of production Kerry Haynie, line producer Brian Eating.

Editor was Stewart Reeves at Work Post with executive producer Marlo Baird, producer Tita Poe, assistant editor Brian Meagher.

Visual effects were produced at A52 by VFX supervisor Andy McKenna, CG supervisor Andy Wilkoff, 2D artists Cameron Coombs, Michael Vagilenty, Adam Flynn, Rod Basham, Gavin Camp, Michael Plescia, finishing artists Kevin Stokes and Gabe Sanchez, matte painters Dark Hoffman and Marissa Krupen, 3D artists Tom Briggs, Dustin Mellum, Kirk Shintani, Josh Dyer, Jon Balcome, Manny Guizar, Michael Cardenas, Michael Bettinardi, Joseph Chichi, animator Earl Burnley, producer Stacy Kessler-Aungst, head of production Kim B. Christensen, executive producer Patrick Nugent, colourist Paul Yacono.

Sound was designed at Barking Owl and mixed at Formosa Group by Peter Rincon.

Music is “Gimme Some Lovin’” by The Spencer Davis Group, written by Steve Winwood, supervised at Saatchi & Saatchi LA by Kristen Hosack.

Nike opened its “Unlimited” campaign with “Unlimited Future”, a commercial set in a baby nursery. The ad opens on a nursery full of future sports stars, including diaper-clad LeBron James, Serena Williams, Neymar Jr, Zhou Qi and Mo Farah, to the faint music of Chopin’s Berceuse, Op. 57, playing from a plastic radio. Actor Bobby Cannavale enters dramatically. “Listen up, babies! Life’s not fair. You get no say in the world you’re born into. You don’t decide your name. You don’t decide where you come from. You don’t decide if you have a place to call home, or if your whole family has to leave the country. (Yeah, it’s messed up.) You don’t decide how the world judges a person like you. You don’t decide how your story begins. But you do get to decide how it ends. Yes!” The YouTube version ends with rain tapping on the window and a baby fist lifted high above the crib.

The Nike Unlimited Future film was developed at Wieden + Kennedy, Portland, by global creative directors Alberto Ponte and Ryan O’Rourke, interactive director Dan Viens, executive producer Matt Hunnicutt, copywriter Josh Bogdan, art director Pedro Izique, agency producer Erin Goodsell, digital producers Patrick Marzullo, Keith Rice, strategic planners Andy Lindblade, Nathan Goldberg, Reid Schilperoort, media and communications planning team Danny Sheniak, John Furnari, Brian Goldstein, Jocelyn Reist, account team Chris Willingham, Alyssa Ramsey, Corey Woodson, Anna Boteva, Carly Williamson, working with Nike marketing team Ean Lensch, YinMei San, Amber Rushton.

Filming was shot by director Damien Chazelle via Superprime with executive producer Rebecca Skinner, head of production Roger Zorovich, line producer William Green, director of photography Rodrigo Prieto, production designer Melanie Jones, with Dan Bell Casting.

Editor was Eric Zumbrunnen at Exile with assistant editor Dusten Silverman, post producer Brittany Carson, executive post producer Carol Lynn Weaver, head of production Jennifer Locke.

Visual effects were produced at The Mill by 2D lead artist Adam Lambert, 2D artists Joy Tiernan, Jason Bergman, matte painter Rasha Shalaby, VFX producer Alex Bader, VFX coordinator Samantha Hernandez, executive VFX producer Enca Kaul, shoot supervisors Phil Crowe and Tim Rudgard, colourist Adam Scott, executive producer Thatcher Peterson and color producer Diane Valera.

Sound was designed at Barking Owl by sound designer Michael Anastasi, creative director Kelly Fuller Bayett, producer Ashley Benton. Sound was mixed at Lime Studios by mixer Rohan Young, audio assistant Ben Tomastik and executive producer Susie Boyajan.

Music composed by John Nau was produced at Beacon Street by executive producer Adrea Lavezzoli. Music, “Berceuse, Op. 57”, by Frédéric Chopin, was produced at Walker Music by arranger McKenzie Stubbert, executive producer Sara Matarazzo, senior producer Abbey Hickman, music coordinators Jacob Piontek and Marissa Hernandez. “Kicking Down Doors” by Santigold was supervised at Walker.

Just say no to vertical videos: https://youtu.be/Bt9zSfinwFA

*Vertical video made slightly less terrible by The Vertical Vigilante. Saving the Internet, one vertical video at a time.