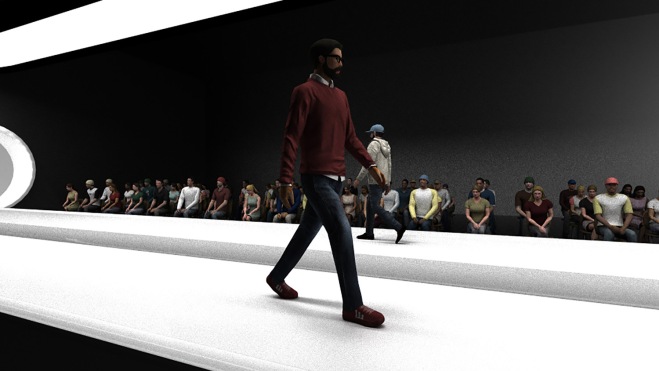

When Massive (Multiple Agent Simulation System in Virtual Environment) was first revealed to the world in 2001 with the release of Peter Jackson’s Lord of the Rings films, it stunned audiences and the visual effects community alike with its use of fuzzy logic to drive crowd animation. Developed at Weta Digital by Stephen Regelous, Massive later became a standalone product. Regelous has continued to develop Massive, and at SIGGRAPH he recently showed off a new Parts Library and a new 3ds Max integration. vfxblog sat down for a demo of the latest software and a chat with Regelous about the state of AI and where Massive is ‘at’, particularly now that there are new crowd simulation competitors and perhaps new opportunities beyond film and TV.

vfxblog: What does the code base or the software of Massive look like compared to when you wrote it for Lord of the Rings?

Stephen Regelous: It’s exactly the same. I mean, it was a couple hundred thousand lines of code when I’d finished at Weta, and it’s now about half a million lines of code but that couple hundred thousand lines of code is still in there.

vfxblog: So the idea of the brain and the crowd agents is still the central tenet?

Stephen Regelous: It’s exactly the same. In fact, we often get questions like, ‘So, when you finished with Lord of the Rings, how did you deal with this whole new thing?’ It wasn’t a new thing, because I’d had that in mind right from the start. Even at Weta, once I’d got the simulations working and it was time to start looking at how we were going to do shots with it, the management was saying, ‘Okay, well, now we’ve got to figure out what the UI is for doing shots.’ I said, ‘Here it is.’ Because I’d done that right from the start. I was planning for it to be used in production, and I was planning for it to go on to other studios, so that was always there.

vfxblog: What were people doing, do you remember, before crowd simulation software became popular?

Stephen Regelous: Well, hand animation was always an option, and believe it or not it’s still used to this day. But the more common option for crowds was to do a particle animation and then either stick cards on them, which was not really the favored option, or to stick pre-baked cycles onto it.

vfxblog: There are now several competitors to Massive in the crowd simulation space. What do you think are Massive’s strengths compared to some of the other tools?

Stephen Regelous: Well, the biggest difference, certainly between Massive and Golaem, for example, is that Massive is an autonomous agent animation system. It’s not a particle system with cycles attached. When you take the particle approach, you then have to modify the motion to fit what the particles did, which is backwards. What you want is for the agents to be driven by realistic motion, so where they put their feet is determined by the actions they perform. That way you keep all the naturalness of the original performance, and that determines where they end up going, because they say, ‘I want to do this action.’

And they pick this action from their library, they perform the action, and it progresses them through the scene. That way you get very natural motion. If you try to conform the motion to what a particle did then you’re going to make compromises to the motion, and compromising the quality of the motion is not a good thing to do. No animation supervisor is gonna like you if you do that.
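To make the contrast concrete, here is a toy Python sketch, not Massive's actual code, of the action-driven idea: an agent picks a clip from a small library, and the clip's own root displacement moves it through the scene, rather than a cycle being retimed to follow a precomputed particle path. The `Action` and `Agent` classes, the clip names and distances, and the "end nearest the goal" selection rule are all invented for illustration; in Massive the choice is made by the agent's fuzzy logic brain.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """Hypothetical motion clip: a name plus the root motion it produces."""
    name: str
    forward: float  # metres travelled along the new heading over the clip
    turn: float     # change of heading in radians over the clip


@dataclass
class Agent:
    x: float = 0.0
    y: float = 0.0
    heading: float = 0.0  # radians, 0 points along +x

    def perform(self, action: Action) -> None:
        # The clip's own root displacement moves the agent, so foot placement
        # stays as captured; nothing is retimed to fit a precomputed path.
        self.heading += action.turn
        self.x += action.forward * math.cos(self.heading)
        self.y += action.forward * math.sin(self.heading)


def choose_action(agent: Agent, goal: tuple[float, float],
                  library: list[Action]) -> Action:
    """Toy decision rule: pick the clip whose end position lands nearest the goal."""
    def end_distance(action: Action) -> float:
        heading = agent.heading + action.turn
        end_x = agent.x + action.forward * math.cos(heading)
        end_y = agent.y + action.forward * math.sin(heading)
        return math.hypot(goal[0] - end_x, goal[1] - end_y)
    return min(library, key=end_distance)


# Usage: a tiny invented library and five decisions toward a goal 10 m ahead.
LIBRARY = [
    Action("walk", 1.4, 0.0),
    Action("walk_turn_left", 1.2, math.radians(30)),
    Action("walk_turn_right", 1.2, -math.radians(30)),
    Action("idle", 0.0, 0.0),
]
agent = Agent()
for _ in range(5):
    agent.perform(choose_action(agent, (10.0, 0.0), LIBRARY))
```

Here the agent simply walks five straight clips and ends up about 7 m along the x axis; its path is a by-product of the clips it chose, which is the ordering Regelous argues for.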

Another issue with Massive versus other tools is the black box thing. Most crowd systems are built on what I call black box functionality. You want terrain adaptation or look-at? There’s a box for it, there’s a function for it, and you’ve got parameters so you can control it. But there’s always a time when it doesn’t do what you need, and then how do you get inside? And what do you see inside, if you can get inside at all?

There have been crowd systems in the past that didn’t let you inside at all, so if that functionality breaks in the shot, you’re screwed. You’re just screwed. You’ve got to go back to hand placement or something. I think some systems allow you to write code that goes inside those nodes, or to make your own nodes with code inside, but if you’re writing code during shot production you’re also screwed, because the turnaround cycle for writing code is way, way longer than what you’ve got for doing your shots. Compare that to Massive, where if you’ve already built your agents you should be able to do a shot easily in a day, maybe less, maybe even get a couple of iterations of a shot in a day. In fact, as I was saying before, some studios budget Massive shots as 2D shots because they’re so much cheaper than a typical 3D shot.

vfxblog: Over the twenty years of development, what would you say are some of the major changes you have made to Massive?

Stephen Regelous: One thing that pops into my mind is agent fields. Initially I was taking, and in a way I still am, a pure artificial life approach to solving this problem. There have been a lot of engineering approaches to doing crowds, and you end up with engineering: you get robotic behavior, or you get something that isn’t very artist friendly. But the end result has to look natural, so if you use natural processes you’re more likely to get a natural result.

And if the processes that you’re using are based on what happens in nature, then if something goes wrong it’s probably going to go wrong in a fairly natural way instead of a glitchy way. And in fact that is the case. We’ve had lots of happy accidents; you get happy accidents with Massive all the time. Take that famous case from the first battle we did, where we had agents running away. Everyone ascribes that to them being too smart to fight, but actually it was because there was something I hadn’t thought to put in their brains. Even so, the end result looked natural.

So the artificial life approach is what I started with, and so we did sensory input. They have vision, they have sound, they have an actual image of the scene generated from the point of view of each agent, and those very pixels go into their brain for processing through the fuzzy logic rules. It was very hardcore artificial life stuff so that you get natural behavior for sure.

One of the more recent big changes I made was Parts, where you can have parts of an agent’s brain saved in separate files. Say you’ve built a really great adaptation module in the brain, and you want all your agents to automatically get it, along with any changes you make to that one file. You can now do that using Parts. That’s especially useful for big studios that have lots of people working on their agents: someone has to work on this part of the agent, someone else on that part, and they want all those different bits to be pulled in when they load up the agents. That’s great, but the idea behind Parts is that you can have a library of parts that you just drop in, building brains in seconds instead of the days or perhaps weeks all that brain building used to take.

But then I had to make the Parts Library. I thought it was just going to take a couple of weeks to throw together a bunch of useful parts and get it out, but it actually turned out to be months of work to make a good parts library. And it was in the process of doing that that I discovered this was possibly the biggest, most important change I’ve made.

vfxblog: When all the press came out about Massive at the time of Lord of the Rings, AI was the selling point, the talking point. Have there been any other AI developments that you’ve noticed, or that you would want to put into Massive?

Stephen Regelous: No. Well, a lot of the fuss about AI at the moment is deep learning, or more properly convolutional networks. And that’s very useful. It’s basically artificial neural networks, and right when I started with Massive that was obviously an option. I could have made the brains out of neural networks, but they’re not production friendly, because you need a lot of training, and if you make a change that’s a lot more training. So the director can’t come in and say, ‘Oh, make them pick their feet up a bit higher,’ or ‘make them cut corners a bit more.’ It’s like, well, then we’ve got to retrain all the networks, and that’s a lot of work.

So fuzzy logic can be functionally equivalent to neural nets, but you don’t train it. You just write it: you put together the rules yourself, and in the case of Massive that’s just dragging and dropping nodes. Because it had to be used in production, it had to be something you can change instantly, and fuzzy logic can give you the same naturalness as neural nets without training; you just author the rules as you do other assets in production.
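To illustrate what "authoring instead of training" means, here is a minimal fuzzy steering rule set in Python. It is a generic zero-order Sugeno-style sketch (linear ramp memberships, `min` for AND, a strength-weighted average of rule outputs), not Massive's node graph or its actual inference method. The inputs, set boundaries, and turn rates are hypothetical values chosen for the example.

```python
def ramp_up(x: float, lo: float, hi: float) -> float:
    """Membership rising linearly from 0 at lo to 1 at hi."""
    if x <= lo:
        return 0.0
    if x >= hi:
        return 1.0
    return (x - lo) / (hi - lo)


def ramp_down(x: float, lo: float, hi: float) -> float:
    """Membership falling linearly from 1 at lo to 0 at hi."""
    return 1.0 - ramp_up(x, lo, hi)


# Hypothetical fuzzy sets over two sensed inputs.
def near(distance: float) -> float:    # metres to the nearest obstacle
    return ramp_down(distance, 1.0, 5.0)


def far(distance: float) -> float:
    return ramp_up(distance, 1.0, 5.0)


def on_left(bearing: float) -> float:  # degrees, positive = obstacle to the left
    return ramp_up(bearing, 0.0, 45.0)


def on_right(bearing: float) -> float:
    return ramp_down(bearing, -45.0, 0.0)


# Each rule: (firing strength from the inputs, turn rate in degrees/second).
# These are written by hand; nothing here is learned from data.
RULES = [
    (lambda d, b: min(near(d), on_left(b)), -60.0),   # near AND left  -> turn right
    (lambda d, b: min(near(d), on_right(b)), 60.0),   # near AND right -> turn left
    (lambda d, b: far(d), 0.0),                       # far            -> keep heading
]


def turn_rate(distance: float, bearing: float, rules=RULES) -> float:
    """Blend rule outputs by firing strength (weighted-average defuzzification)."""
    fired = [(strength(distance, bearing), output) for strength, output in rules]
    total = sum(s for s, _ in fired)
    if total == 0.0:
        return 0.0
    return sum(s * output for s, output in fired) / total


# A director's note such as "swerve harder" is a one-number edit, not a retrain.
SHARPER = [(RULES[0][0], -90.0)] + RULES[1:]
```

An obstacle 3 m away at 45 degrees to the left is half "near" and half "far", so the agent blends the two rules and turns right at -30 degrees per second. Changing the behavior means editing a rule, which is the production property Regelous describes.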

vfxblog: Can you talk about other developments with Massive, such as, can customers do their own thing with the software via APIs etc?

Stephen Regelous: They can to some degree. We’ve got a couple of APIs. We’ve got a C/C++ API, which has been used for some pretty extensive stuff here and there, and there’s a Python API for more pipeline-level stuff. But in general the hackability is more in the ASCII files that Massive reads and writes, because then people can do whatever they want. When I wrote Massive, most pipeline hacking went on in the files that programs were sharing, so I made the files very easy to hack. And of course Python is good at hacking text, so you can write your own Python scripts to modify those files if you want to.
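As an illustration of the text-file approach, here is a small Python script of the kind a pipeline TD might write. The `variable <name> <value>` line format is invented for this example and is not Massive's actual file syntax; `set_variable` and `update_file` are hypothetical helper names. The point is only that plain ASCII can be patched with the standard library alone.

```python
import re
from pathlib import Path


def set_variable(text: str, name: str, value: float) -> str:
    """Replace the value on every `variable <name> <value>` line.

    The line format is hypothetical, chosen only to illustrate text patching.
    """
    pattern = re.compile(
        rf"^(\s*variable\s+{re.escape(name)}\s+)(\S+)", re.MULTILINE
    )
    # A function replacement avoids backslash-escaping issues in the new value.
    new_text, count = pattern.subn(lambda m: f"{m.group(1)}{value:g}", text)
    if count == 0:
        raise KeyError(f"variable {name!r} not found")
    return new_text


def update_file(path: Path, name: str, value: float) -> None:
    """Patch one value in a text file in place."""
    path.write_text(set_variable(path.read_text(), name, value))


# Usage on an in-memory sample written in the made-up format.
sample = """agent soldier
  variable walk_speed 1.2
  variable look_range 15
"""
updated = set_variable(sample, "walk_speed", 1.5)
```

After the call, only the `walk_speed` line changes to `1.5`; the other lines pass through untouched.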

So there are several ways to get in there and do stuff. There is an assumption these days that, because Python in Maya is the way a lot of pipelines work, you need to be doing that stuff in Massive too, but the flexibility of what you can do in the brains actually gets rid of about ninety percent of that requirement. So the APIs we’ve got, or hacking the text files, are actually plenty for what most people need.

vfxblog: Tell me more about the new Parts library in Massive, why did you build this?

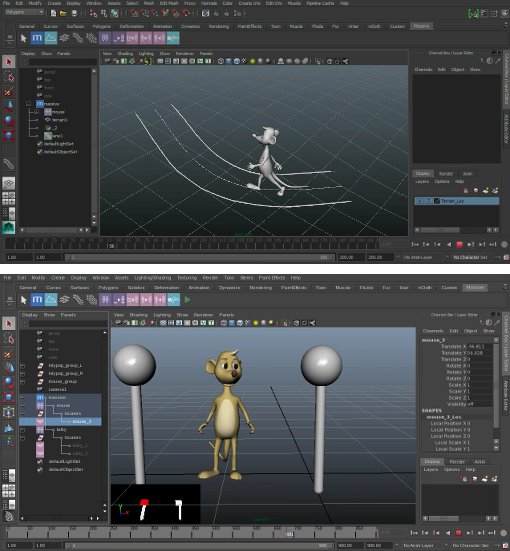

Stephen Regelous: This was the biggest wake-up call since I made Massive: the fact that now you can build an agent’s brain in seconds instead of laboriously putting nodes together for days or whatever. The parts library comes with some blank agents. They’ve got no brain, but they’ve got a very simple body. In this case it’s a bird agent and we want it to flock, so we drop in the Flock part, which also brings in the Turn 3D part, because Flock needs it and knows that. Then we drop in the Follow 3D Lane part because we want the birds to follow 3D lanes, and it knows which other parts it needs, so those are loaded automatically. We’ll also drop in a Flap part, which flaps their wings when they’re going uphill, basically. And there you go: that’s less than a minute to build the brain for this agent, and now they’re a lovely flock of birds flapping their wings and heading in the direction you want.
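The automatic loading Regelous describes, where dropping in Flock also brings in Turn 3D, is at heart dependency resolution. Here is a minimal Python sketch using a depth-first search that loads each part's dependencies before the part itself and rejects cycles. Only the Flock to Turn 3D dependency comes from the interview; "Lane Sensor 3D" and the other dependency entries are hypothetical placeholders, and this is not how Massive itself stores or resolves parts.

```python
def resolve(requested: list[str], deps: dict[str, list[str]]) -> list[str]:
    """Return a load order in which every part follows the parts it needs."""
    order: list[str] = []
    state: dict[str, str] = {}  # part -> "visiting" or "done"

    def visit(part: str, chain: list[str]) -> None:
        if state.get(part) == "done":
            return  # already loaded once; never load a part twice
        if state.get(part) == "visiting":
            raise ValueError("dependency cycle: " + " -> ".join(chain + [part]))
        if part not in deps:
            raise KeyError(f"unknown part {part!r}")
        state[part] = "visiting"
        for dep in deps[part]:
            visit(dep, chain + [part])
        state[part] = "done"
        order.append(part)

    for part in requested:
        visit(part, [])
    return order


# Flock -> Turn 3D is from the demo; everything else is a made-up placeholder.
PARTS = {
    "Flock": ["Turn 3D"],
    "Turn 3D": [],
    "Follow 3D Lane": ["Turn 3D", "Lane Sensor 3D"],
    "Lane Sensor 3D": [],
    "Flap": [],
}

load_order = resolve(["Flock", "Follow 3D Lane", "Flap"], PARTS)
```

Turn 3D appears once even though two requested parts depend on it, which is the behavior you want when several parts share a building block.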

Formation is something people often want to do, and it can be a bit tricky to set up, but we’ve got a Formation part, so bang, you’re done. The placement is easy, of course, but to stay in formation as they walk around, each agent needs to keep its relationship to the guy at its side and the guy in front; otherwise the formation just falls apart. We’ve also got biped terrain, because there’s undulating terrain here, and action variation, which gets them out of sync with each other so they’re not all moving in perfect lockstep. And head turns, so they glance around now and then: even if there are no sounds in the scene, they’ll still look around a little bit.
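One simple way to express "keep your relationship to the guy at the side and the guy in front" is sketched below in Python. Each neighbor implies a slot for the agent, the two estimates are averaged, and the agent is nudged toward that slot with a proportional gain. This is an assumption-laden illustration, not the internals of Massive's Formation part: the `Vec` type, the gain of 0.5, and the use of world-space offsets (a fuller version would rotate offsets by the formation's heading) are all choices made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec:
    x: float
    y: float

    def __add__(self, other: "Vec") -> "Vec":
        return Vec(self.x + other.x, self.y + other.y)

    def __sub__(self, other: "Vec") -> "Vec":
        return Vec(self.x - other.x, self.y - other.y)

    def scale(self, k: float) -> "Vec":
        return Vec(self.x * k, self.y * k)


def formation_correction(pos: Vec, front_pos: Vec, side_pos: Vec,
                         front_offset: Vec, side_offset: Vec,
                         gain: float = 0.5) -> Vec:
    """Steering nudge toward the slot implied by both reference neighbours.

    Offsets are in world space here for simplicity.
    """
    slot_from_front = front_pos + front_offset
    slot_from_side = side_pos + side_offset
    target = (slot_from_front + slot_from_side).scale(0.5)
    return (target - pos).scale(gain)


# The agent's slot is 2 m behind its front neighbour and 2 m to the right
# (negative y) of its side neighbour; it has lagged 1 m behind that slot.
correction = formation_correction(
    pos=Vec(7.0, 0.0),
    front_pos=Vec(10.0, 0.0), side_pos=Vec(8.0, 2.0),
    front_offset=Vec(-2.0, 0.0), side_offset=Vec(0.0, -2.0),
)
```

Both neighbors agree the slot is at (8, 0), so the agent gets a forward nudge of half the 1 m gap. If either neighbor drifts, the averaged target moves with it, which keeps local spacing intact instead of letting the formation fall apart.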

So all of that takes a few seconds to put together. In fact, I timed myself making this one, and I think it was twenty-nine seconds. Even for me, building that brain by hand would have taken at least hours, if not a day or two, and for somebody with much less experience it could be a week of work to create all of this, because there’s quite a lot going on inside these nodes. But now it’s literally twenty-nine seconds to make that brain.

vfxblog: Have you considered producing Massive for any applications other than TV or film, such as a phone app or a specific game?

Stephen Regelous: Well, I do have a demo where you can build a playable game using the Parts library in about a minute, a playable game with actual joystick input. I’ve thought about making a product out of that, where you can build your own games using Massive. I’ve had various goes at making such things and done a bit of prototyping, and I’ve got a new one that I’ve just started working on, but none of these have come to fruition so far, because I always get to a point where I think, no, that’s not going where I want it to go. But we are talking to people about other applications all the time, and there are some things on the boil, but it’s too soon to talk about anything really.

vfxblog: Well, thanks so much for talking to me.

Stephen Regelous: Thank you.
