Under the artful watch of visual and digital effects supervisors Volker Engel, Doug Smith and Tricia Ashford, a crack team of in-house effects artists and several post houses carried out the significant CG, matte painting and compositing duties for Independence Day. Among those was VisionArt, which came onto the production late in the game to help produce dogfight sequences, Alien Attacker shield effects and the final Mothership explosion. Faced with next to no time on these shots, artists at the studio capitalized on Side Effects’ Prisms (pre-Houdini) and developed their own procedural systems, in particular a tool called ‘Sparky’, to deliver the effects on time. In Part 2 of our retro ID4 coverage (Part 1 looked at the Model Shop), vfxblog talks to two VisionArt artists on Independence Day: Rob Bredow, now CTO at Lucasfilm, and Daniel Kramer, now a visual effects supervisor at Sony Pictures Imageworks.

vfxblog: VisionArt’s work in ID4 holds up so well – and even now appears daunting – is that how it felt at the time? What was the feeling like going into this major show especially given how late you came on board?

Rob Bredow: It’s one of those rare movies that gets a lot of repeat viewings and is always a fun ride. Honestly, we saw the ID4 Super Bowl commercial (along with the rest of the world) and our producer, Josh Rose, reached out to congratulate the VFX team on the great work in the teaser. A few days later I think they worked out a deal where ID4 “bought out” our entire shop for the next four months and we dove into getting as much work done as we could in the remaining time. Before we were done, I think our small team had contributed more than half of the CG in the film and helped with a lot of compositing as well.

Daniel Kramer: It was certainly a step up for us at VisionArt and for me personally. Up to that point I had solely worked on television vfx projects and main titles for films. ID4 was my first film project working on vfx. Surprisingly, I don’t remember ever feeling overwhelmed by it; I only remember feeling really excited to be a part of it. Being young and foolish probably helped.

vfxblog: What were the major technical challenges you felt VisionArt needed to overcome for the show?

Rob Bredow: We had a few different areas to dive into. The Alien Attacker shield effect was heavily featured in the movie and had already gone through a lot of iterations even before we were onboard but hadn’t gotten a creative sign off yet. There was also the end sequence featuring the Alien Mothership explosion. The most fun, and challenging, task for me was all of the ships featured in the dogfights throughout the movie where the filmmakers wanted to see hundreds of planes in the air. My understanding is that before VisionArt was brought on board, most of this work was being done by animating the planes one by one and was very time consuming so I built a procedural system to animate and render those ships.

vfxblog: Can you talk about getting started with animating the F-18s and alien ships – how were models built in terms of reference/scans and how closely did you feel these matched to the real thing and miniatures?

Rob Bredow: This was definitely squarely in the era where there was plenty of debate about what could be done with computer graphics as opposed to models and miniatures. CG wasn’t new, but it wasn’t the natural solution for most shots yet. One of the great things about this film was that it had a ton of huge miniature shoots that not only provided great reference, but also set the tone of the look of the film.

Daniel Kramer: Since we came in so late in the game a lot of work had already been done by the in-house VFX unit assembled for ID4. They had models and textures for both the F-18s and the Alien Attackers built in Alias. Carl Hooper and I converted those assets over to Side Effects Software’s Prisms and re-built them for our pipeline. Others were involved in converting over various assets, Dorene Haver, Bethany Shackelford, Pete Shinners. The models and textures were very simple by today’s standards but the filmmakers were careful to use practical models whenever possible for hero shots. I believe we did tests early on to ensure our digital models matched up to what the in-house team had built in Alias.

The animation was pretty simple; there aren’t a lot of moving parts on an F-18. We hand animated shots where there were a handful of CG ships. I don’t remember using any tools to help us with the physics for those sorts of shots. Each of us took on various hero shots including myself, Carl Hooper, Pete Shinners, Rob Bredow, Dorene Haver, Vinh Lee, Barry Safley.

For F-18 missile trails we used both smoke-textured particle spheres rendered in Mantra and our in-house sprite renderer “Sparky”. Sparky was both a particle simulation tool and a GL sprite renderer (I think this was before we adopted OpenGL). Sparky was closely modeled after Prisms, allowing you to build up a stack of procedural operators to drive simulation and rendering. It pre-dated POPs in Houdini but resembled that workflow. We couldn’t render true volumes or self shadows but we could shape the trails by manipulating the normals of the underlying points and by driving point colors on the sprites. Our sprites could be more than just cards; we’d generally create custom geometry and vary the point normals and colors across the geometry for more complexity. All pretty crude by today’s standards but very similar to a lot of realtime game techniques. Rob Bredow was the main developer of Sparky with others extending it over the years at VisionArt.

vfxblog: Can you talk more about the ‘Sparky’ toolset?

Rob Bredow: Sparky was a procedural animation system that Pete Shinners and I initially wrote. It had a particle “cooking” system that consisted of a list of nodes that were linked together in any order. It also had a rendering subsystem which used OpenGL to draw particles into a buffer that could then be written to disk. Sparky was comparable in its feature set to Dynamation, but using a list of nodes instead of expressions to drive most of the animation.
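As an editorial aside, the "list of nodes" idea can be illustrated with a minimal Python sketch: an ordered list of operators is run over the particles once per frame. This is not Sparky's code. Every name here (`Particle`, `gravity`, `drag`, `integrate`, `cook`) and every constant is hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    pos: list  # [x, y, z]
    vel: list  # [vx, vy, vz]


def gravity(g=-9.8):
    """Return an operator that accelerates particles along Y."""
    def op(particles, dt):
        for p in particles:
            p.vel[1] += g * dt
    return op


def drag(k=0.5):
    """Return an operator that applies simple linear velocity damping."""
    def op(particles, dt):
        scale = max(0.0, 1.0 - k * dt)
        for p in particles:
            p.vel = [v * scale for v in p.vel]
    return op


def integrate():
    """Return an operator that moves each particle by its velocity."""
    def op(particles, dt):
        for p in particles:
            p.pos = [x + v * dt for x, v in zip(p.pos, p.vel)]
    return op


def cook(particles, operators, dt):
    """Run every operator in list order for one time step."""
    for op in operators:
        op(particles, dt)


# Hypothetical stack: the order of the list is the order of evaluation.
stack = [gravity(), drag(), integrate()]
particles = [Particle([0.0, 10.0, 0.0], [1.0, 0.0, 0.0])]
for _ in range(24):  # one second at an assumed 24 frames per second
    cook(particles, stack, 1.0 / 24.0)
```

Because behavior lives in the list rather than in per-particle expressions, changing a shot in a system like this would amount to inserting, removing or reordering entries in `stack`.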

For Independence Day, Sparky required extensive enhancements to deliver the hundreds of ships dogfighting in the sky. The graphics buffers only had 1024 pixels across so we had to tile the rendering into 4 tiles and stitch the buffers back together on disk to render the 2k frames for the film. We also rendered separate alpha passes for the composite artists. Prior to ID4, Sparky only knew how to render particles so I added geometry rendering (with a simple baked down texture per asset) which made it possible to render the ships and missiles. I used a variation on that system to do a lo-res animation of the shield effect for the sequences where the Alien Attackers still had their shields intact. (Spoiler alert?)
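For readers unfamiliar with tiled rendering, here is a small Python sketch of the stitch step: each tile is rendered separately and copied into its place in the full frame. The 2×2 layout, the stand-in `render_tile` function and the tiny frame size are assumptions made for illustration; the interview only says four tiles were used.

```python
def render_tile(x0, y0, w, h):
    """Stand-in renderer: returns h rows of w pixels, each pixel tagged
    with its coordinates in the full frame."""
    return [[(x0 + x, y0 + y) for x in range(w)] for y in range(h)]


def render_tiled(width, height, tiles_x=2, tiles_y=2):
    """Render the frame tile by tile and stitch the tiles into one image."""
    if width % tiles_x or height % tiles_y:
        raise ValueError("frame size must divide evenly into tiles")
    tw, th = width // tiles_x, height // tiles_y
    frame = [[None] * width for _ in range(height)]
    for ty in range(tiles_y):
        for tx in range(tiles_x):
            x0, y0 = tx * tw, ty * th
            tile = render_tile(x0, y0, tw, th)
            # Copy each tile row into the matching slice of the frame row.
            for row in range(th):
                frame[y0 + row][x0:x0 + tw] = tile[row]
    return frame


frame = render_tiled(8, 4)  # toy size; a film frame would be far larger
```

In a real renderer each tile would also need its own camera window so that the four views line up seamlessly; that part is omitted here.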

The biggest development came in how I set up the particle system to simulate the dogfight itself. There were different groups of particles (or objects in Sparky) for each role in the dogfight. An example role would be an “offensive” F/A-18 fighter. In this role, the F/A-18 would be attracted to the nearest Alien Attacker and give chase. If they were close enough and had a reasonable shot, they’d fire off a missile which was another object that had a different turning ability and speed and would of course emit its own trail of smoke. If the missile got close enough to the Alien Attacker, it would explode (triggering another set of particles) which would damage the attacker (triggering another set of fire and smoke particles) or trigger the shield effect depending on the sequence in the film.

Both the F/A-18’s and the Alien Attackers had their own characteristic flight patterns and the roles for the offensive and defensive ships had some variations. In addition, the Alien Attackers didn’t have guided weapons but could shoot their blasts more frequently.
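The "offensive" role described above can be sketched in a few lines of Python. This is a simplified 2D illustration under stated assumptions, not the Sparky implementation: the `Ship` fields, the `chase_step` function, and the `fire_range` and `fire_cone` thresholds are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Ship:
    x: float
    y: float
    heading: float   # radians
    speed: float     # units per second
    max_turn: float  # radians per second


def nearest(ship, targets):
    """Return the target closest to the ship."""
    return min(targets, key=lambda t: math.hypot(t.x - ship.x, t.y - ship.y))


def angle_diff(a, b):
    """Signed smallest difference a - b, wrapped to [-pi, pi]."""
    return math.atan2(math.sin(a - b), math.cos(a - b))


def chase_step(ship, targets, dt, fire_range=50.0, fire_cone=0.1):
    """Turn toward the nearest target (limited by max_turn), advance, and
    return the target if it is close and nearly dead ahead, else None."""
    target = nearest(ship, targets)
    desired = math.atan2(target.y - ship.y, target.x - ship.x)
    limit = ship.max_turn * dt
    ship.heading += max(-limit, min(limit, angle_diff(desired, ship.heading)))
    ship.x += math.cos(ship.heading) * ship.speed * dt
    ship.y += math.sin(ship.heading) * ship.speed * dt
    dist = math.hypot(target.x - ship.x, target.y - ship.y)
    bearing = math.atan2(target.y - ship.y, target.x - ship.x)
    aim = abs(angle_diff(bearing, ship.heading))
    return target if dist < fire_range and aim < fire_cone else None


fighter = Ship(0.0, 0.0, heading=0.0, speed=100.0, max_turn=1.0)
attackers = [Ship(300.0, 200.0, 0.0, 0.0, 0.0), Ship(-900.0, 0.0, 0.0, 0.0, 0.0)]
fired_at = None
for frame in range(240):
    fired_at = chase_step(fighter, attackers, 1.0 / 24.0)
    if fired_at is not None:
        break  # a full system would spawn a missile object here
```

A missile could plausibly reuse the same step with a higher `speed` and a different `max_turn`, which matches the description of it as another object with its own turning ability.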

Once the system was set up, it was a simple matter of setting a long pre-roll so we’d have a nice history of smoke trails in the air from the ongoing dogfight and rendering out the shot. It would take a few seconds per frame, and most of that time was spent saving the image. If we didn’t like it once it was rendered, I could easily tweak the starting conditions by increasing the count of Alien Attackers or F/A-18’s and determining whether more should be on offense or defense. Or, if we liked everything but one or two ships, I had a way to delete a vehicle by ID to keep it from ruining an otherwise perfectly good shot.

Looking back, I would have to say it was a pretty satisfying project—at least in hindsight. In the moment, it was pretty stressful to be developing Sparky and trying to do final elements for nearly 100 shots on a pretty tight schedule. I remember one night around 3am looking for a crashing bug in the software and wondering how I was ever going to get all my shots done.

vfxblog: What were the challenges you faced in generating the background F-18s and other ships and elements? Can you talk about how ‘Sparky’ was used here?

Daniel Kramer: The sheer number of shots, ships and missile trails required for the wide battles was a huge challenge. While many of us were taking on the hero shots with a handful of ships, Rob Bredow was writing new operators into Sparky to handle the huge crowd simulations needed to pull off those shots. Rob would be able to talk in more detail about how these were handled but here’s what I remember. He built particle operators for a ‘follow the leader’ behavior allowing some ships to chase other ships. He built in rules for a ship’s speed, turning radius, banking behavior etc. When chasing ships were within a certain radius of the leader it would trigger a missile fire event and a hit event. All of the behaviors were programmed into this custom made crowd simulator. Ships were then instanced to each particle and rendered in hardware on an SGI Octane. I don’t think there was enough power to actually instance the ship geometry; I believe most of the ships were sprites with normal maps (at least the very distant ones). We rendered an array of ship views into a large sprite sheet from all possible angles and Sparky was programmed to choose the right sprite given the ship’s direction and angle to camera. For large battles almost everything was a sprite including missile trails and explosions. A pretty awesome system for its time.
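The sprite-sheet lookup Kramer recalls can be illustrated with a short Python sketch that quantizes the camera's direction, as seen from the ship, into a row and column of pre-rendered views. The sheet dimensions, the Y-up convention and the `sprite_index` function are assumptions for illustration, and the sketch ignores the ship's pitch and roll.

```python
import math


def sprite_index(ship_pos, ship_yaw, cam_pos, cols=16, rows=9):
    """Pick the (row, col) of the pre-rendered view closest to the camera's
    direction as seen from the ship. Y is up; yaw is measured in the XZ
    plane from +X toward +Z."""
    dx = cam_pos[0] - ship_pos[0]
    dy = cam_pos[1] - ship_pos[1]
    dz = cam_pos[2] - ship_pos[2]
    # Azimuth of the camera relative to the ship's nose, in [0, 2*pi).
    azimuth = (math.atan2(dz, dx) - ship_yaw) % (2.0 * math.pi)
    # Elevation of the camera above the ship's horizontal plane.
    elevation = math.atan2(dy, math.hypot(dx, dz))
    col = int(round(azimuth / (2.0 * math.pi) * cols)) % cols
    row = int(round((elevation + math.pi / 2.0) / math.pi * (rows - 1)))
    return row, col


ahead = sprite_index((0.0, 0.0, 0.0), 0.0, (10.0, 0.0, 0.0))
behind = sprite_index((0.0, 0.0, 0.0), 0.0, (-10.0, 0.0, 0.0))
above = sprite_index((0.0, 0.0, 0.0), 0.0, (0.0, 10.0, 0.0))
```

With these defaults the middle row holds level views and the columns sweep once around the ship, so a camera dead ahead and a camera directly behind land half a sheet apart.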

vfxblog: What were the tools used for the animation, and also how did you then approach lighting and rendering for the ships? How was compositing handled back then?

Daniel Kramer: For hero shots we used Prisms for animation and lighting, Mantra for rendering. I believe we used ICE for compositing…maybe Chalice? ICE was a standalone compositor by SideFx and Chalice was a spinoff of ICE which was more tailored to film work. We used both at VisionArt at various times but the timeline is a bit foggy. Prisms was the precursor to Houdini and had a very different UI look and workflow, but the ICE UI was actually quite similar to the very first versions of Houdini and I’m sure they shared the same UI kit.

Generally each artist at VisionArt handled shots from start to finish. If you were assigned a shot you handled animation, fx simulations, lighting and compositing. Working that way for so many years really developed my understanding of the whole process and fostered a great sense of ownership for the shots I worked on. We had a few specialists for paint work for example. Over time some of the artists naturally gravitated to a more specialist role depending on what they were good at.

Rob Bredow: Most of the composites we did during that era at VisionArt were completed in ICE (part of the Prisms package). It was a node-based compositor that we used a lot for both pre-comp and final comp work. Dorene Haver led our compositing work and she may have also been using an early version of Silicon Grail’s Chalice (RFX). We were also in the early days of evaluating Kodak’s Cineon compositing system and it’s possible Dorene comped some shots in Cineon as well.

Due to the workload, the small size of our VisionArt crew, and the short time frame we generated a lot of elements that we handed off to other facilities to composite. This was particularly true for many of the dogfight elements that I worked on. Today, I still run into artists and supervisors around town who comped my elements during Independence Day.

vfxblog: The ship shield effects were so innovative – can you talk about the various elements that went into them and how they were realized? How was Prisms used here, for example?

Rob Bredow: Carl Hooper, Dan Kramer and I teamed up on those effects. The hero effect was rendered in Prisms using Mantra. The shape of the shields was created in geometry and then distorted using procedural ripples with Prisms Surface Operators (SOPs). My role was to create a series of animated textures that Carl used to overlap at the impact point so that you’d see the energy from the impact shoot out along the surface of the shield. We did a lot of variations of the look combining various techniques before settling on the look you see in the film today.

vfxblog: What kind of planning went into the look of the Mothership explosion – again, it was really distinctive.

Rob Bredow: Thank you. I still remember the shot name for the wide Mothership explosion shot: 389×1. That may have been because of the number of iterations we went through to find the final look of the shot for the film.

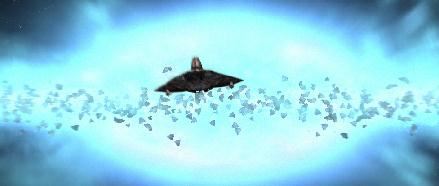

We started with a few different storyboard variations and some notes from Roland Emmerich about what coloring he was looking for and how it needed to have a lot of energy and feel unique. From there, we started building elements and layering them together. I rendered all of the particles in Sparky and some of the geometry as well. Dan Kramer led the follow-up side shot design which used some similar elements and had the debris field lighting up with fire to imply they were entering the atmosphere.

Daniel Kramer: I believe these shots were shared between myself, Rob Bredow, and Carl Hooper using a combination of Prisms and Sparky. Bethany Shackelford painted textures for us and Dorene Haver took on some of our harder composites.

The first shot of the ship exploding was mostly handled by Rob and Carl, with Rob providing a lot of the fx elements through Sparky and Carl working with the hard surface animation and lighting. Rob was the most familiar with getting the most out of Sparky and we’d often pair up with him for those elements.

I mainly focused on the Alien Attacker re-entry shot; it’s the side view with the flaming debris field behind it. I built the chunks of debris procedurally in Prisms using various noise functions and instanced those to a particle simulation creating the debris field. We didn’t have a good solution for fire so I came up with a technique using sprites. I wrapped each chunk of debris in a deforming grid driven by a mass-spring deformer in Prisms, a crude cloth simulation. So each chunk had flapping cloth trailing behind it. Then I texture-mapped moving fire footage onto those grids. Supporting that with sparks and simple smoke was fairly convincing for the time. Rob provided a lot of the energy fx just behind the hard surface debris.
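To show what a mass-spring deformer does in principle, here is a minimal Python sketch of a chain of point masses trailing behind a moving anchor. It is a simplification of the grid Kramer describes (a 1D chain in 2D, not a cloth grid), and it is not the Prisms deformer. The `step_chain` function, the unit masses and every constant are assumptions for illustration.

```python
import math


def step_chain(points, velocities, anchor, rest_len, stiffness, damping, dt):
    """Advance a chain of unit point masses one step with semi-implicit
    Euler. points[0] is pinned to the anchor; neighbours are linked by
    springs that obey Hooke's law."""
    points[0][:] = anchor
    velocities[0][:] = [0.0, 0.0]
    n = len(points)
    forces = [[0.0, 0.0] for _ in range(n)]
    for i in range(n - 1):
        dx = points[i + 1][0] - points[i][0]
        dy = points[i + 1][1] - points[i][1]
        length = math.hypot(dx, dy) or 1e-9
        f = stiffness * (length - rest_len)  # positive when stretched
        fx, fy = f * dx / length, f * dy / length
        forces[i][0] += fx
        forces[i][1] += fy
        forces[i + 1][0] -= fx
        forces[i + 1][1] -= fy
    for i in range(1, n):
        for a in range(2):
            velocities[i][a] = (velocities[i][a] + forces[i][a] * dt) * (1.0 - damping * dt)
            points[i][a] += velocities[i][a] * dt


# Five points at rest, one unit apart, trailing behind the anchor along -X.
points = [[-float(i), 0.0] for i in range(5)]
velocities = [[0.0, 0.0] for _ in range(5)]
for frame in range(48):
    anchor = [2.0 * (frame + 1) / 24.0, 0.0]  # anchor moves at 2 units/second
    step_chain(points, velocities, anchor, rest_len=1.0,
               stiffness=50.0, damping=1.0, dt=1.0 / 24.0)
```

Extending this to a grid would mean adding springs between rows as well as along them, with the leading edge pinned to the debris chunk.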

vfxblog: How was the debris field from the Mothership and its animation handled?

Rob Bredow: We procedurally modeled a wide variety of debris with fairly straightforward techniques. Since the debris was to indicate the various “shells” of the ship, the pieces started as simple extrusions of a bunch of random shapes and then we added more procedural detail as needed.

From there, we attached the pieces to a particle simulation and adjusted the speeds until we had something dynamic. The chunks were attached and rendered in Sparky along with their trailing plasma for the first wide explosion shot. There were a lot of layers in there with the fastest debris heading out in a ring towards the camera, and other layers making more of a traditional spherical explosion shape behind.

The “white out” effect does a decent job looking like an over-exposure despite its simplicity. All of the plasma was rendered by adding the various sprite layers together. The white out effect you see is simply clipping. If I got to redo that shot today, I’d use a more sophisticated color space and floating point math to get a more filmic look and avoid the clipping artifacts as it maxes out each of the individual RGB channels.
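The difference between clipped additive layers and a floating point approach can be seen in a small Python sketch. The layer colors are made up, and the `1 - exp(-x)` rolloff is just one simple highlight curve picked for illustration; Bredow does not say which color space or curve he would use.

```python
import math


def add_clipped(layers):
    """Sum 8-bit RGB layers per channel, clamping each channel at 255."""
    return tuple(min(255, sum(layer[c] for layer in layers)) for c in range(3))


def add_float_rolloff(layers, exposure=1.0):
    """Sum layers in floating point, then compress highlights with
    1 - exp(-x) so channels approach white gradually instead of clipping."""
    total = [sum(layer[c] for layer in layers) / 255.0 for c in range(3)]
    return tuple(round(255 * (1.0 - math.exp(-exposure * v))) for v in total)


# Three hypothetical orange plasma sprites overlapping on one pixel.
plasma = [(200, 120, 40), (180, 100, 30), (150, 90, 20)]
clipped = add_clipped(plasma)       # red and green both clamp to 255
rolled = add_float_rolloff(plasma)  # channels keep their relative order
```

In the clipped result red and green both reach 255, so the orange shifts toward yellow, which is the per-channel artifact described above. The rolloff version keeps red above green above blue while still brightening toward white.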

vfxblog: Can you talk about how, at the time, shots were finaled and reviewed, and submitted to the overall VFX supervisor and production?

Rob Bredow: There were a few different approaches used on the film. Somewhat famously, the VFX Supervision team rented an RV and drove around the Westside of Los Angeles from VFX house to house. Our Internet connections were not fast enough in that era for us to send reviews in digitally, so these in-person visits gave the supervisors the chance to look over the shoulder of the artists working on the shots. I remember many late nights writing software and collaborating with Volker Engel, Doug Smith, and Tricia Ashford – the supervisors on the show – trying to solve problems as they’d come up.

Once a shot was further along in its production, it was filmed out (as a WIP or “for final”) and screened for Roland. Sometimes we would attend these VFX dailies in person, but often we’d be busy working and get the notes by fax.

I still remember the day I had a great version of 389×1 I was hoping to final and the notes came back on the FAX, “Plan for a few all nighters starting tonight on this one.” Since I’d already been up for the last two nights, I took that as an opportunity to go home, get some sleep and return to the office when the RV showed up that afternoon to get working again.

vfxblog: What did you think it was that made many of these VisionArt shots so successful? What was most challenging in terms of workflow/creative control at the time?

Daniel Kramer: For the most part shots were well planned as far as what would be practical and what would be CG. Volker Engel shot plates for the most hero F18 shots and cotton clouds for backgrounds. There was a healthy amount of caution at the time about getting too close to CG as our shading models were pretty simplistic and practical models ruled the day. So a big part of it was good planning on the production side.

A big challenge for us was the timeline, we were brought into the project fairly late in the game. We were well suited for that sort of deadline having worked on a lot of television projects like Star Trek: Deep Space Nine. We were used to building CG ships and producing work on a 2 week schedule for each episode. That trained us to work fast and it was common for us to develop new tools for each new project on short schedules.

vfxblog: Any other particular memories from working on ID4 and VisionArt in general?

Rob Bredow: I look back with very fond memories at my time on Independence Day and VisionArt. So many of the talented artists there have gone on to become great supervisors and create memorable shots in films for many years. I feel very fortunate to have had the experience of working on that film and for it to be enjoyed by so many people over the years has been particularly rewarding. It’s one of three movie posters on my wall beside me right now.

When ID4 won the Academy Award that year for Best Visual Effects, we were all at the office watching together. We couldn’t believe it, and I don’t think I even realized at the time what a unique run we just had.

Daniel Kramer: Honestly they were some of the best years of my life. The feeling of optimism and opportunity. The feeling we could do anything we put our minds to. I was working with my best friends on amazing projects and VisionArt was like a second family.

The week before the opening the entire company chartered a private boat to Catalina for a few days. The day we returned we all attended opening day in Westwood to watch the movie with an audience. It was truly an exciting time.

